Turning User Research Into Real Organizational Change

Paul Boag, 2025-07-01T10:00:00+00:00 (updated 2025-07-02T15:03:33+00:00). This article is sponsored by Lyssna. We’ve all been there: you pour your heart and soul into conducting meticulous user research. You gather insightful data, create detailed […]

Accessibility

Can Good UX Protect Older Users From Digital Scams?

Carrie Webster, 2025-06-25T12:00:00+00:00 (updated 2025-06-25T15:04:30+00:00). A few years ago, my mum, who is in her 80s and not tech-savvy, almost got scammed. She received an email from what […]

Accessibility

Meet Accessible UX Research, A Brand-New Smashing Book

Vitaly Friedman, 2025-06-20T16:00:00+00:00 (updated 2025-06-25T15:04:30+00:00). UX research can take so much of the guesswork out of the design process! But it’s easy to forget just how different people are and […]

Accessibility

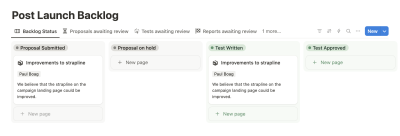


Turning User Research Into Real Organizational Change

Paul Boag 2025-07-01T10:00:00+00:00

2025-07-02T15:03:33+00:00

This article is sponsored by Lyssna

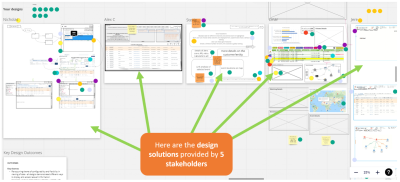

We’ve all been there: you pour your heart and soul into conducting meticulous user research. You gather insightful data, create detailed reports, and confidently deliver your findings. Yet, months later, little has changed. Your research sits idle on someone’s desk, gathering digital dust. It feels frustrating, like carefully preparing a fantastic meal, only to have it left uneaten.

There are so many useful tools (like Lyssna) to help us run incredible user research, and plenty of articles about how to get the most from them. However, there’s much less guidance about ensuring our user research gets adopted and brings about real change. So, in this post, I want to answer a simple question: How can you make sure your user research truly transforms your organization?

Introduction

User research is only as valuable as the impact it has.

When research insights fail to make their way into decisions, teams miss out on opportunities to improve products, experiences, and ultimately, business results. In this post, we’ll look at:

- Why research often fails to influence organizational change;

- How to ensure strategic alignment so research matters from day one;

- Ways to communicate insights clearly so stakeholders stay engaged;

- How to overcome practical implementation barriers;

- Strategies for realigning policies and culture to support research-driven changes.

By covering each of these areas, you’ll have a clear roadmap for turning your hard-won research into genuine action.

Typical Reasons For Failure

If you’ve ever felt your research get stuck, it probably came down to one (or more) of these issues.

Strategic Misalignment

When findings aren’t tied to business objectives or ROI, they struggle to gain traction. Sharing a particular hurdle that users face will fall on deaf ears if stakeholders cannot see how that problem will impact their bottom line.

Research arriving too late is another hurdle. If you share insights after key decisions are made, stakeholders assume your input won’t change anything. Finally, research often competes with other priorities. Teams might have limited resources and focus on urgent deadlines rather than long-term user improvements.

Communication Issues

Even brilliant research can get lost in translation if it’s buried in dense reports. I’ve seen stakeholders glaze over when handed 30-page documents full of jargon. When key takeaways aren’t crystal clear, decision-makers can’t quickly act on your findings.

Organizational silos can make communication worse. Marketing might have valuable insights that product managers never see, or designers may share findings that customer support doesn’t know how to use. Without a way to bridge those gaps, research lives in a vacuum.

Implementation Challenges

Great insights require a champion. Without a clear owner, research often lives with the person who ran it, and no one else feels responsible. Stakeholder skepticism also plays a role. Some teams doubt the methods or worry the findings don’t apply to real customers.

Even if there is momentum, insufficient follow-up or progress tracking can stall things. I’ve heard teams say, “We started down that path but ran out of time.” Without regular check-ins, good ideas fade away.

Policy And Cultural Barriers

Legal, compliance, or tech constraints can limit what you propose. I once suggested a redesign to comply with new accessibility standards, but the existing technical stack couldn’t support it. Resistance due to established culture is also common. If a company’s used to launching fast and iterating later, they might see research-driven change as slowing them down.

Now that we understand what stands in the way of effective research implementation, let’s explore practical solutions to overcome these challenges and drive real organizational change.

Ensuring Strategic Alignment

When research ties directly to business goals, it becomes impossible to ignore. Here’s how to do it.

Early Stakeholder Engagement

Invite key decision-makers into the research planning phase. I like to host a kickoff session where we map research objectives to specific KPIs, like increasing conversions by 10% or reducing support tickets by 20%. When your stakeholders help shape those objectives, they’re more invested in the results.

Research Objectives Aligned With Business KPIs

While UX designers often focus on user metrics like satisfaction scores or task completion rates, it’s crucial to connect our research to business outcomes that matter to stakeholders. Start by identifying the key business metrics that will demonstrate the value of your research:

- Identify which metrics matter most to the organization (e.g., conversion rate, churn, average order value).

- Frame research questions to directly address those metrics.

- Make preliminary hypotheses about how insights may affect the bottom line.

Develop Stakeholder-Specific Value Propositions

When presenting user research to groups, it’s easy to fall into the trap of delivering a one-size-fits-all message that fails to truly resonate with anyone. Instead, we need to carefully consider how different stakeholders will receive and act on our findings.

The real power of user research emerges when we can connect our insights directly to what matters most for each specific audience:

- For the product team: Show how insights can reduce development time by eliminating guesswork.

- For marketing: Demonstrate how understanding user language can boost ad copy effectiveness.

- For executives: Highlight potential cost savings or revenue gains.

ROI Framework Development

Stakeholders want to see real numbers. Develop simple templates to estimate potential cost savings or revenue gains. For example, if you uncover a usability issue that’s causing a 5% drop-off in the signup flow, translate that into lost revenue per month.

I also recommend documenting success stories from similar projects within your own organization or from case studies. When a stakeholder sees that another company boosted revenue by 15% after addressing a UX flaw, they’re more likely to pay attention.
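The signup example above is simple arithmetic, but writing it down makes the assumptions visible to stakeholders. Here is a minimal Python sketch; the function name and every input figure are hypothetical placeholders, so substitute numbers from your own analytics.

```python
def monthly_revenue_lost(signup_starts_per_month: int,
                         drop_off_rate: float,
                         revenue_per_signup: float) -> float:
    """Estimate revenue lost each month to a usability-driven drop-off.

    All inputs are assumptions supplied from your own analytics:
    - signup_starts_per_month: people who begin the signup flow
    - drop_off_rate: extra share who abandon because of the issue (0.05 = 5%)
    - revenue_per_signup: average monthly revenue from one completed signup
    """
    if not 0.0 <= drop_off_rate <= 1.0:
        raise ValueError("drop_off_rate must be between 0 and 1")
    lost_signups = signup_starts_per_month * drop_off_rate
    return lost_signups * revenue_per_signup


# Hypothetical figures: 20,000 signup starts, a 5% drop-off, $30 per signup.
loss = monthly_revenue_lost(20_000, 0.05, 30.0)
print(f"Estimated monthly revenue lost: ${loss:,.2f}")
print(f"Estimated yearly revenue lost: ${loss * 12:,.2f}")
```

Keeping each input as a named parameter lets stakeholders challenge a specific assumption (say, revenue per signup) instead of dismissing the whole estimate.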

Research Pipeline Integration

Integrate research tasks directly into your product roadmap. Schedule user interviews or usability tests just before major feature sprints. That way, findings land at the right moment — when teams are making critical decisions.

Regular Touchpoints With Strategic Teams

It’s essential to maintain consistent communication with strategic teams through regular research review meetings. These sessions provide a dedicated space to discuss new insights and findings. To keep everyone aligned, give stakeholders access to a shared calendar that clearly marks key research milestones, and use collaborative tools like Trello boards so the entire team stays informed about the research plan and progress.

Resource Optimization

Research doesn’t have to be a massive, months-long effort each time. Build modular research plans that can scale. If you need quick, early feedback, run a five-user usability test rather than a full survey. For deeper analysis, you can add more participants later.

Addressing Communication Issues

Making research understandable is almost as important as the research itself. Let’s explore how to share insights so they stick.

Create Research One-Pagers

Condense key findings into a scannable one-pager that fits on a single sheet. Start with a brief summary of the problem, then highlight three to five top takeaways. Use bold headings and visual elements (charts, icons) to draw attention.

Implement Progressive Disclosure

Avoid dumping all details at once. Start with a high-level executive summary that anyone can read in 30 seconds. Then, link to a more detailed section for folks who want the full methodology or raw data. This layered approach helps different stakeholders absorb information at their own pace.

Use Visual Storytelling

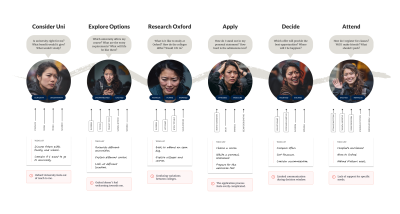

Humans are wired to respond to stories. Transform data into a narrative by using journey maps, before/after scenarios, and user stories. For example, illustrate how a user feels at each step of a signup process, then show how proposed changes could improve their experience.

Regular Stakeholder Updates

Keep the conversation going. Send brief weekly or biweekly “research highlights” emails or hold short meetings. Each update should take no more than five minutes and focus on one or two new insights. When stakeholders hear snippets of progress regularly, research stays top of mind.

Interactive Presentations

Take research readouts beyond slide decks. Host workshop-style sessions where stakeholders engage with findings hands-on. For instance, break them into small groups to discuss a specific persona and brainstorm solutions. When people physically interact with research (sticky notes, printed journey maps), they internalize it better.

Overcome Implementation Challenges

Now that stakeholders understand and value your research, let’s make sure they turn insights into action.

Establish Clear Ownership

Assign a dedicated owner for each major recommendation. Use a RACI matrix to clarify who’s Responsible, Accountable, Consulted, and Informed. I like to share a simple table listing each initiative, the person driving it, and key milestones.

When everyone knows who’s accountable, progress is more likely.

RACI Matrix Example

| Initiative | Responsible | Accountable | Consulted | Informed |
|---|---|---|---|---|
| Redesign Signup Flow | UX Lead | Product Manager | Engineering, Legal | Marketing, Support |
| Create One-Pager Templates | UX Researcher | Design Director | Stakeholder Team | All Departments |
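If you keep the RACI matrix in a script or spreadsheet export rather than a slide, a quick check can catch ownership gaps before they stall work. The Python sketch below encodes the two example rows and checks the common RACI convention that each initiative has exactly one Accountable owner and at least one Responsible party. The data layout and function names are illustrative assumptions, not a standard format.

```python
from dataclasses import dataclass, field


@dataclass
class RaciRow:
    """One initiative in a RACI matrix (hypothetical structure)."""
    initiative: str
    responsible: list[str]
    accountable: list[str]
    consulted: list[str] = field(default_factory=list)
    informed: list[str] = field(default_factory=list)


def raci_problems(rows: list[RaciRow]) -> list[str]:
    """Return human-readable problems; an empty list means the matrix passes."""
    problems = []
    for row in rows:
        # Convention: exactly one person is ultimately accountable.
        if len(row.accountable) != 1:
            problems.append(f"{row.initiative}: needs exactly one Accountable owner, "
                            f"found {len(row.accountable)}")
        # Someone has to actually do the work.
        if not row.responsible:
            problems.append(f"{row.initiative}: nobody is Responsible")
    return problems


matrix = [
    RaciRow("Redesign Signup Flow", ["UX Lead"], ["Product Manager"],
            ["Engineering", "Legal"], ["Marketing", "Support"]),
    RaciRow("Create One-Pager Templates", ["UX Researcher"], ["Design Director"],
            ["Stakeholder Team"], ["All Departments"]),
]
print(raci_problems(matrix) or "Every initiative has a clear owner.")
```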

Build Implementation Roadmaps

Break recommendations down into phases. For example:

- Phase 1: Quick usability tweaks (1–2 weeks).

- Phase 2: Prototype new design (3–4 weeks).

- Phase 3: Launch A/B test (2–3 weeks).

Each phase needs clear timelines, success metrics, and resources identified upfront.

Address Stakeholder Skepticism

Be transparent about your methods. Share your recruitment screeners, interview scripts, and a summary of analysis steps. Offer validation sessions where stakeholders can ask questions about how the data was collected and interpreted. When they understand the process, they trust the findings more.

Create Support Systems

Even when stakeholders agree, they need help executing. Establish mentorship or buddy programs where experienced researchers or designers guide implementation. Develop training materials, like short “how-to” guides on running usability tests or interpreting survey data. Set up feedback channels (Slack channels, shared docs) where teams can ask questions or share roadblocks.

Monitor And Track Progress

Hold progress reviews weekly or biweekly. Use dashboards to track metrics such as A/B test performance, error rates, or user satisfaction scores. Even fairly complex dashboards can now be built with no-code tools and AI assistance, so you no longer need to rely on developer support.
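A dashboard tile for A/B test performance usually comes down to comparing conversion rates between a control and a variant. The sketch below uses a standard two-sided, two-proportion z-test with only the Python standard library. The function name and figures are made up, it assumes both groups are reasonably large with some conversions, and a dedicated experimentation tool or a statistician should have the final word on significance.

```python
import math


def ab_test_summary(control_conversions: int, control_visitors: int,
                    variant_conversions: int, variant_visitors: int) -> dict:
    """Compare two conversion rates with a two-sided, two-proportion z-test."""
    rate_control = control_conversions / control_visitors
    rate_variant = variant_conversions / variant_visitors
    # Pooled rate under the null hypothesis that both versions convert equally.
    pooled = ((control_conversions + variant_conversions)
              / (control_visitors + variant_visitors))
    if not 0 < pooled < 1:
        raise ValueError("need at least one conversion and one non-conversion")
    std_err = math.sqrt(pooled * (1 - pooled)
                        * (1 / control_visitors + 1 / variant_visitors))
    z = (rate_variant - rate_control) / std_err
    # Standard normal CDF via the error function, then a two-sided p-value.
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    lift = (rate_variant - rate_control) / rate_control if rate_control else float("nan")
    return {
        "control_rate": rate_control,
        "variant_rate": rate_variant,
        "relative_lift": lift,
        "z": z,
        "p_value": p_value,
    }


# Made-up numbers: 200 of 5,000 control users convert vs 260 of 5,000 on the variant.
summary = ab_test_summary(200, 5_000, 260, 5_000)
for name, value in summary.items():
    print(f"{name}: {value:.4f}")
```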

Realign Policies And Culture

Even the best strategic plans and communication tactics can stumble if policies and culture aren’t supportive. Here’s how to address systemic barriers.

Create A Policy Evolution Framework

First, audit existing policies for anything that blocks research-driven changes. Maybe your data security policy requires months of legal review before you can recruit participants. Document those barriers and work with legal or compliance teams to create flexible guidelines. Develop a process for policy exception requests — so if you need a faster path for a small study, you know how to get approval without massive delays.

Technical Infrastructure Adaptation

Technology can be a silent killer of good ideas. Before proposing changes, work with IT to understand current limitations. Document technical requirements clearly so teams know what’s feasible. Propose a phased approach to any necessary infrastructure updates. Start with small changes that have an immediate impact, then plan for larger upgrades over time.

Build Cultural Buy-In

Culture shift doesn’t happen overnight. Share quick wins and success stories from early adopters in your organization. Recognize and reward change pioneers. Send a team-wide shout-out when someone successfully implements a research-driven improvement. Create a champions network across departments, so each area has at least one advocate who can spread best practices and encourage others.

Develop A Change Management Strategy

Change management is about clear, consistent communication. Develop tailored communication plans for different stakeholder groups. For example, executives might get a one-page impact summary, while developers get technical documentation and staging environments to test new designs. Establish feedback channels so teams can voice concerns or suggestions. Finally, provide change management training for team leaders so they can guide their direct reports through transitions.

Measure Cultural Impact

Culture can be hard to quantify, but simple pulse surveys go a long way. Ask employees how they feel about recent changes and whether they are more confident using data to make decisions. Track employee engagement metrics like survey participation or forum activity in research channels. Monitor resistance patterns (e.g., repeated delays or rejections) and address the root causes proactively.

Conclusions

Transforming user research into organizational change requires a holistic approach. Here’s what matters most:

- Strategic Alignment: Involve stakeholders early, tie research to KPIs, and integrate research into decision cycles.

- Effective Communication: Use one-pagers, progressive disclosure, visual storytelling, regular updates, and interactive presentations to keep research alive.

- Implementation Frameworks: Assign clear ownership, build phased roadmaps, address skepticism, offer support systems, and track progress.

- Culture and Policy: Audit and update policies, adapt infrastructure gradually, foster cultural buy-in, and employ change management techniques.

When you bring all of these elements together, research stops being an isolated exercise and becomes a driving force for real, measurable improvements. Keep in mind:

- Early stakeholder engagement drives buy-in.

- Clear research-to-ROI frameworks get attention.

- Ongoing, digestible communication keeps momentum.

- Dedicated ownership and phased roadmaps prevent stalls.

- Policy flexibility and cultural support enable lasting change.

This is an iterative, ongoing process. Each success builds trust and opens doors for more ambitious research efforts. Be patient, stay persistent, and keep adapting. When your organization sees research as a core driver of decisions, you’ll know you’ve truly succeeded.

(yk, il)


Can Good UX Protect Older Users From Digital Scams?

Carrie Webster 2025-06-25T12:00:00+00:00

2025-06-25T15:04:30+00:00

A few years ago, my mum, who is in her 80s and not tech-savvy, almost got scammed. She received an email from what appeared to be her bank. It looked convincing, with a professional logo, clean formatting, and no obvious typos. The message said there was a suspicious charge on her account and presented a link asking her to “verify immediately.”

She wasn’t sure what to do. So she called me.

That hesitation saved her. The email was fake, and if she’d clicked on the link, she would’ve landed on a counterfeit login page, handing over her password details without knowing it.

That incident shook me. I design digital experiences for a living. And yet, someone I love almost got caught simply because a bad actor knew how to design well. That raised a question I haven’t stopped thinking about since: Can good UX protect people from online scams?

Quite apart from this incident, I see my mum struggle with most apps on her phone. For example, she finds navigating WhatsApp and YouTube awkward because she isn’t used to the standard app navigation at the bottom of the screen. What’s “intuitive” for many users is simply not understood by older, less tech-savvy users.

Brief Overview Of How Scams Are Evolving Online

Online scams are becoming increasingly sophisticated, leveraging advanced technologies like artificial intelligence and deepfake videos to create convincing fraudulent content. Scammers are also exploiting new digital platforms, including social media and messaging apps, to reach victims more directly and personally.

Phishing schemes have become more targeted, often using personal information taken from social media to craft customised attacks. Additionally, scammers are using crypto schemes and fake investment opportunities to lure those seeking quick financial gains, making online scams more convincing, diverse, and harder to detect.

The Rise In Fraud Targeting Older, Less Tech-savvy Users

In 2021, there were more than 90,000 older victims of fraud, according to the FBI. These cases resulted in US$1.7 billion in losses, a 74% increase compared with 2020. Even so, that may be a significant undercount since embarrassment or lack of awareness keeps some victims from reporting.

In Australia, the ACCC’s 2023 “Targeting Scams” report revealed that Australians aged 65 and over were the only age group to experience an increase in scam losses compared to the previous year. Their losses rose by 13.3% to $120 million, often following contact with scammers on social media platforms.

In the UK, nearly three in five (61%) people aged over 65 have been the target of fraud or a scam. On average, older people who have been scammed have lost nearly £4,000 each.

According to global consumer protection agencies, people over 60 are more likely to lose money to online scams than any other group. That’s a glaring sign: we need to rethink how we’re designing experiences for them.

Older users are disproportionately targeted by scammers for several reasons:

- They’re perceived as having more savings or assets.

- They’re less likely to be digital natives, so they may not spot the red flags others do.

- They tend to trust authority figures and brands, especially when messages appear “official.”

Scammers exploit trust. They impersonate banks, government agencies, health providers, and even family members. The one that scares me the most is the ability to use AI to mimic a loved one’s voice — anyone can be tricked by this.

Cognitive Load And Decision Fatigue In Older Users

Imagine navigating a confusing mobile app after a long day. Now imagine you’re in your 70s or 80s; your eyesight isn’t as sharp, your finger tapping isn’t as accurate, and every new screen feels like a puzzle.

As people age, they may experience slower processing speeds, reduced working memory, and lower tolerance for complexity. That means:

- Multistep processes are harder to follow.

- Unexpected changes in layout or behaviour can cause anxiety.

- Vague language increases confusion.

Decision fatigue hits harder, too. If a user has already made five choices in an app, they may click the sixth button without fully understanding what it does, especially if it seems to be part of the flow.

Scammers exploit these factors, but good UX can help reduce their impact.

The Digital Literacy Gap And Common Pain Points

There’s a big difference between someone who grew up with the internet and someone who started using it in their 60s. Older users often struggle with:

- Recognising safe vs. suspicious links;

- Differentiating between ads and actual content;

- Knowing how to verify sources;

- Understanding terms like “multi-factor authentication” or “phishing”.

They may also be more likely to blame themselves when something goes wrong, leading to underreporting and repeat victimization.

Design can help bridge some of that gap, but only if we build with their experience in mind.

The Role UX Designers Can Play In Preventing Harm

As UX designers, we focus on making things easy, intuitive, and accessible. But we can also shape how people understand risk.

Every choice, from wording to layout to colour, can affect how users interpret safety cues. When we design for the right cues, we help users avoid mistakes. When we get them wrong or ignore them altogether, we leave people vulnerable.

The good news? We have tools. We have influence. And in a world where digital scams are rising, we can use both to design for protection, not just productivity.

UX As The First Line Of Defence

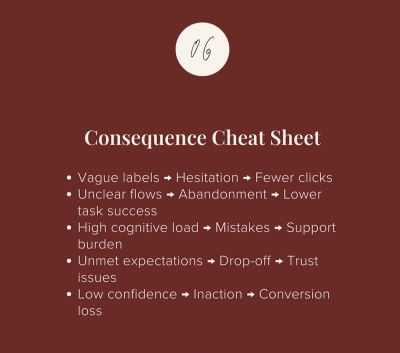

The list below describes some UX design improvements that we can consider as designers:

1. Clear, Simple Design As A Defence Mechanism

- Simple interfaces reduce user errors and scam risks.

- Use linear flows, fewer input fields, and clear, consistent instructions.

- Helps users feel confident and spot unusual activity.

2. Make Security Cues Obvious And Consistent

- Users rely on visible indicators: padlocks, HTTPS, and verification badges.

- Provide clear warnings for risky actions and unambiguous button labels.

3. Prioritize Clarity In Language

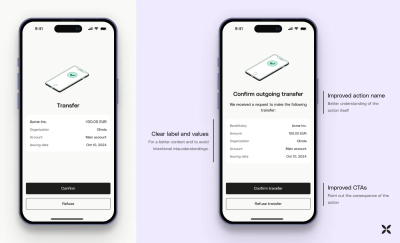

- Use plain, direct language for critical actions (e.g., “Confirm $400 transfer”).

- Avoid vague CTAs like “Continue” or playful labels like “Let’s go!”

- Clear language reduces uncertainty, especially for older users.

4. Focus On Accessibility And Readability

- Use a minimum font size of 16px and high-contrast colour schemes.

- Provide clear spacing and headings to improve scanning.

- Accessibility benefits everyone, not just older users.

5. Use Friction To Protect, Not Hinder

- Intentional friction (e.g., verification steps or warnings) can prevent mistakes.

- Thoughtfully applied, it enhances safety without frustrating users.

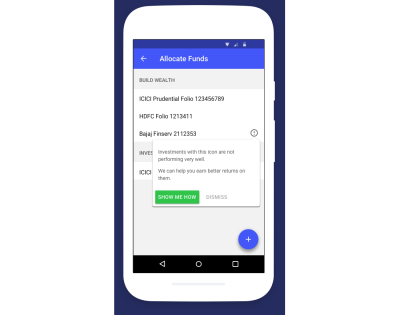

6. Embed Contextual Education

- Include just-in-time tips, tooltips, and passive alerts.

- Help users understand risks within the flow, not after the fact.
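Item 4 above asks for high-contrast colour schemes. One way to check a palette is the contrast-ratio formula from WCAG 2.x, which compares the relative luminance of text and background; WCAG level AA asks for at least 4.5:1 for normal-size text. The Python sketch below implements that formula for `#RRGGBB` colours. The function names and example colours are illustrative, not taken from any particular tool.

```python
def _linear_channel(value_8bit: int) -> float:
    """Convert one 0-255 sRGB channel to linear light, per the WCAG 2.x definition."""
    c = value_8bit / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_colour: str) -> float:
    """Relative luminance of a colour written as '#RRGGBB'."""
    hex_colour = hex_colour.lstrip("#")
    r, g, b = (int(hex_colour[i:i + 2], 16) for i in (0, 2, 4))
    return (0.2126 * _linear_channel(r)
            + 0.7152 * _linear_channel(g)
            + 0.0722 * _linear_channel(b))


def contrast_ratio(foreground: str, background: str) -> float:
    """WCAG contrast ratio, from 1:1 (identical colours) to 21:1 (black on white)."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa_normal_text(foreground: str, background: str) -> bool:
    """WCAG 2.x level AA asks for at least 4.5:1 for normal-size body text."""
    return contrast_ratio(foreground, background) >= 4.5


print(round(contrast_ratio("#000000", "#FFFFFF"), 2))  # black text on white
print(passes_aa_normal_text("#767676", "#FFFFFF"))      # mid grey on white
print(passes_aa_normal_text("#AAAAAA", "#FFFFFF"))      # light grey on white
```

Light grey body text is a common failure here, and it hurts older readers with reduced contrast sensitivity most.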

What Can’t UX Fix?

Let’s be realistic: UX isn’t magic. We can’t stop phishing emails from landing in someone’s inbox. We can’t rewrite bad policies, and we can’t always prevent users from clicking on a well-disguised trap.

I personally think that even good UX may be limited in helping people like my mother, who will never be tech-savvy. Ultimately, people like her also need human support: contact numbers they can call, face-to-face courses on how to stay safe on their phones, and, of course, help from family members when required. These human touchpoints can never be replaced by any kind of digital or AI support.

What we can do as designers is build systems that make hesitation feel natural. We can provide visual clarity, reduce ambiguity, and inject small moments of friction that nudge users to double-check before proceeding, especially in financial and banking apps and websites.
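The friction described here can be expressed as a couple of simple rules on a transfer screen. This Python sketch combines a specific confirm label (as item 3 of the list above recommends) with extra prompts for new payees and large amounts. The threshold, the field names, and the prompt wording (which echoes the NAB example quoted later) are hypothetical assumptions, not any bank’s real rules.

```python
from dataclasses import dataclass

# Hypothetical threshold above which an extra confirmation step is shown.
LARGE_TRANSFER_THRESHOLD = 1_000.00


@dataclass
class TransferRequest:
    amount: float
    payee_name: str
    is_new_payee: bool  # assumption: the app knows whether this payee was paid before


def confirm_button_label(req: TransferRequest) -> str:
    """Plain, specific label instead of a vague 'Continue'."""
    return f"Confirm ${req.amount:,.2f} transfer to {req.payee_name}"


def friction_steps(req: TransferRequest) -> list[str]:
    """Return the extra prompts to show before the transfer goes through."""
    steps = []
    if req.is_new_payee:
        steps.append("Have you spoken to this person on the phone? "
                     "Scammers often pose as trusted contacts.")
    if req.amount >= LARGE_TRANSFER_THRESHOLD:
        steps.append(f"This is a large payment (${req.amount:,.2f}). "
                     "Please check the details before you continue.")
    return steps


req = TransferRequest(amount=400.00, payee_name="J. Smith", is_new_payee=True)
print(confirm_button_label(req))
for prompt in friction_steps(req):
    print("-", prompt)
```

Everyday payments to known payees pass straight through; the pause only appears where a mistake would be costly.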

That hesitation might be the safeguard we need.

Other Key Tips To Help Seniors Avoid Online Scams

1. Be Skeptical Of Unsolicited Communications

Scammers often pose as trusted entities like banks, government agencies, or tech support to trick individuals into revealing personal information. Avoid clicking on links or downloading attachments from unknown sources, and never share personal details like your Medicare number, passwords, or banking information unless you’ve verified the request independently.

2. Use Strong, Unique Passwords And Enable Two-Factor Authentication

Create complex passwords that combine letters, numbers, and symbols, and avoid reusing passwords across different accounts. Whenever possible, enable two-factor authentication (2FA) to add an extra layer of security to your online accounts.

3. Stay Informed About Common Scams

Educate yourself on prevalent scams targeting seniors, such as phishing emails, romance scams, tech support fraud, and investment schemes. Regularly consult trusted resources like the NCOA and Age UK for updates on new scam tactics and prevention strategies.

4. Verify Before You Act

If you receive a request for money or personal information, especially if it’s urgent, take a moment to verify its legitimacy. Contact the organization directly using official contact information, not the details provided in the suspicious message. Be particularly cautious with unexpected requests from supposed family members or friends.

5. Report Suspected Scams Promptly

If you believe you’ve encountered a scam, report it to the appropriate authorities. Reporting helps protect others and contributes to broader efforts to combat fraud.

For more comprehensive information and resources, consider exploring the following:

- National Council on Aging: 22 Tips for Seniors to Avoid Scams

- Age UK: Avoiding Scams Information Guide

- eSafety Commissioner: Online Scams for Seniors

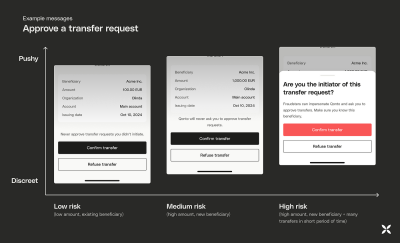

Examples Of Good Alert/Warning UX In Banking Platforms

I recall my mother not recognising a transaction in her banking app and thinking that money was being taken from her account. It turned out to be a legitimate transaction at a local cafe, but the business’s head office was in a suburb she didn’t recognise, which made her think it was fraudulent.

This kind of scenario could easily be addressed with a feature I have seen in the ING banking app (International Netherlands Group): you tap a transaction to see more information about it.

- ING bank: You can now select a transaction to get more information on the business.

- Banking apps like NAB (National Australia Bank) now interrupt suspicious transfers with messages like, “Have you spoken to this person on the phone? Scammers often pose as trusted contacts.” NAB said that December was the biggest month in 2024 for abandoned payments, with customers scrapping $26 million worth of payments after receiving a payment alert.

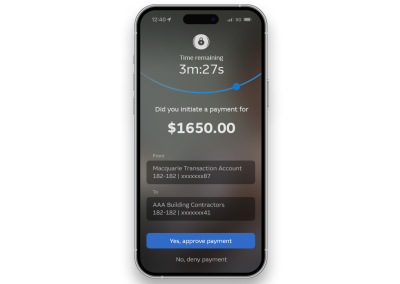

- Macquarie Bank has introduced additional prompts that ask users to confirm they approve each transaction.

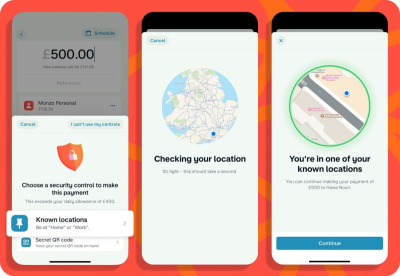

- Monzo Bank has added three security features to reduce online fraud for banking transactions:

  - Verified Locations: Sending or moving large amounts of money is only possible from locations that the account holder has marked as safe. This helps block fraudsters from accessing funds if they’re not near these trusted places.

  - Trusted Approvers: For large transactions, a trusted contact must give the green light. This adds protection if the account holder’s phone is stolen, or if they want to safeguard someone who may be more vulnerable.

  - Secure QR Codes: Account holders can generate a special QR code and keep it stored in a safe place. They scan it when needed to unlock extra layers of security.

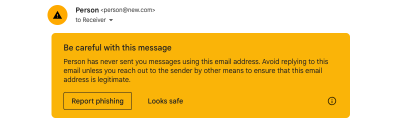

- Email platforms like Gmail highlight spoofed addresses or impersonation attempts with yellow banners and caution icons.

These interventions aren’t designed to stop users outright; they give them one last chance to rethink a transaction. That’s powerful.
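To show how rules like Monzo’s Verified Locations and Trusted Approvers might gate a large payment, here is a purely illustrative Python sketch. The payment limit, the opt-in settings, and all names are assumptions rather than Monzo’s actual implementation, and the secure QR code is left out because the article doesn’t describe exactly what it unlocks.

```python
from dataclasses import dataclass

# Hypothetical limit above which the opt-in protections apply.
LARGE_PAYMENT_LIMIT = 2_500.00


@dataclass
class Settings:
    """Protections the account holder has switched on (assumed to be opt-in)."""
    require_trusted_location: bool = False
    require_trusted_approver: bool = False


@dataclass
class Payment:
    amount: float
    at_trusted_location: bool = False  # device is near a place marked as safe
    approver_said_yes: bool = False    # the trusted contact gave the green light


def blockers(payment: Payment, settings: Settings) -> list[str]:
    """Return unmet conditions; an empty list means the payment can go ahead."""
    if payment.amount < LARGE_PAYMENT_LIMIT:
        return []  # everyday payments are not slowed down
    unmet = []
    if settings.require_trusted_location and not payment.at_trusted_location:
        unmet.append("Send this from one of your trusted locations.")
    if settings.require_trusted_approver and not payment.approver_said_yes:
        unmet.append("Waiting for your trusted approver to confirm.")
    return unmet


settings = Settings(require_trusted_location=True, require_trusted_approver=True)
print(blockers(Payment(50.00), settings))                               # small payment
print(blockers(Payment(5_000.00, at_trusted_location=True), settings))  # needs approval
```

Each unmet condition is phrased as a plain next step, so the user knows why the payment paused and what to do about it.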

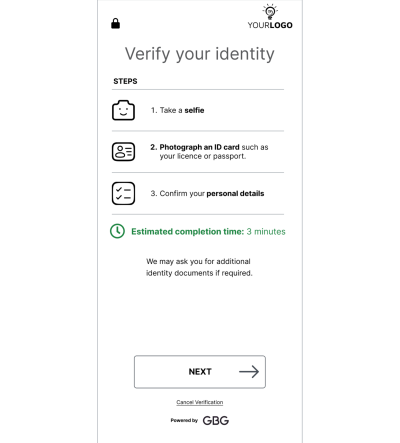

Finally, here’s an example of clear UX cues that streamline the experience and guide users through their journey with greater confidence and clarity.

Conclusion

Added security features in banking apps, like the examples above, aren’t just about preventing fraud; they’re examples of thoughtful UX design. These features are built to feel natural, not burdensome, helping users stay safe without getting overwhelmed. As UX professionals, we have a responsibility to design with protection in mind, anticipating threats and creating experiences that guide users away from risky actions. Good UX in financial products isn’t just seamless; it’s about security by design.

And in a world where digital deception is on the rise, protection is usability. Designers have the power and the responsibility to make interfaces that support safer choices, especially for older users, whose lives and life savings may depend on a single click.

Let’s stop thinking of security as a backend concern or someone else’s job. Let’s design systems that are scam-resistant, age-inclusive, and intentionally clear. And don’t forget to reach out with the additional human touch to help your older family members.

When it comes down to it, good UX isn’t just helpful — it can be life-changing.

(yk)


Meet Accessible UX Research, A Brand-New Smashing Book

Vitaly Friedman 2025-06-20T16:00:00+00:00

2025-06-25T15:04:30+00:00

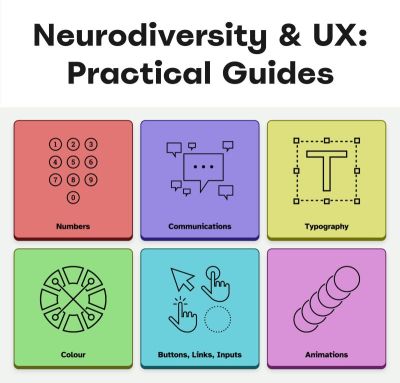

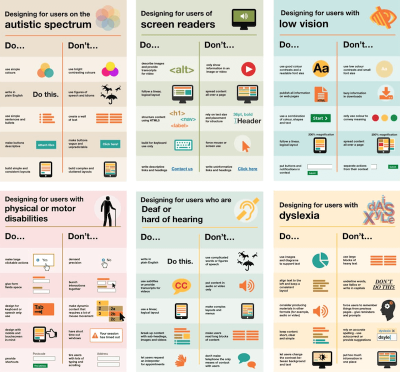

UX research can take so much of the guesswork out of the design process! But it’s easy to forget just how different people are and how their needs and preferences can vary. We can’t predict the needs of every user, but we shouldn’t expect different people to use the product in roughly the same way. That’s how we end up with an incomplete, inaccurate, or simply wrong picture of our customers.

There is no shortage of accessibility checklists and guidelines. But accessibility isn’t a checklist, and it doesn’t happen by accident. It’s a dedicated effort to include, consider, and understand the different needs of different users so that everyone can use our products successfully. That’s why we’ve teamed up with Michele A. Williams on a shiny new book about just that.

Meet Accessible UX Research, your guide to making UX research more inclusive of participants with different needs — from planning and recruiting to facilitation, asking better questions, avoiding bias, and building trust. Pre-order the book.

Print + eBook


$44.00

Quality hardcover. Free worldwide shipping starting in August 2025.

100-day money-back guarantee.

eBook


$19.00

Free!

DRM-free, of course. ePUB, Kindle, PDF available for download later this summer.

Included with your Smashing Membership.


About The Book

The book isn’t a checklist for you to complete as a part of your accessibility work. It’s a practical guide to inclusive UX research, from start to finish. If you’ve ever felt unsure how to include disabled participants, or worried about “getting it wrong,” this book is for you. You’ll get clear, practical strategies to make your research more inclusive, effective, and reliable.

Inside, you’ll learn how to:

- Plan research that includes disabled participants from the start,

- Recruit participants with disabilities,

- Facilitate sessions that work for a range of access needs,

- Ask better questions and avoid unintentionally biased research methods,

- Build trust and confidence in your team around accessibility and inclusion.

The book also challenges common assumptions about disability and urges readers to rethink what inclusion really means in UX research and beyond. Let’s move beyond compliance and start doing research that reflects the full diversity of your users. Whether you’re in industry or academia, this book gives you the tools — and the mindset — to make it happen.

High-quality hardcover. Written by Dr. Michele A. Williams. Cover art by Espen Brunborg. Print shipping in August 2025. eBook available for download later this summer. Pre-order the book.

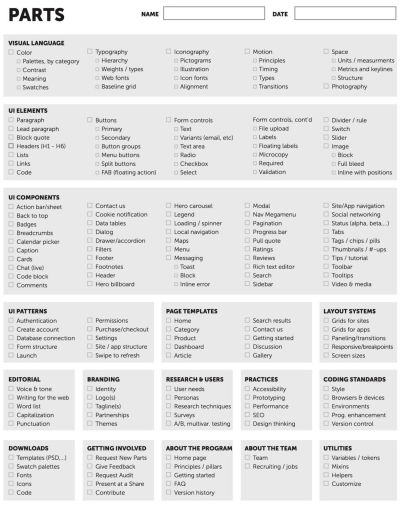

Contents

- Disability mindset: For inclusive research to succeed, we must first confront our mindset about disability, typically influenced by ableism.

- Diversity of disability: Accessibility is not solely about blind screen reader users; disability categories help us unpack and process the diversity of disabled users.

- Disability in the stages of UX research: Disabled participants can and should be part of every research phase — formative, prototype, and summative.

- Recruiting disabled participants: Recruiting disabled participants is not always easy, but that simply means we need to learn strategies on where to look.

- Designing your research: While our goal is to influence accessible products, our research execution must also be accessible.

- Facilitating an accessible study: Preparation and communication with your participants can ensure your study logistics run smoothly.

- Analyzing and reporting with accuracy and impact: How you communicate your findings is just as important as gathering them in the first place — so prepare to be a storyteller, educator, and advocate.

- Disability in the UX research field: Inclusion isn’t just for research participants; it’s important for our colleagues as well, as explained by blind UX Researcher Dr. Cynthia Bennett.

Who This Book Is For

Whether you’re a UX professional who conducts research in industry or academia, or more broadly part of an engineering, product, or design function, you’ll want to read this book if…

- You have been tasked to improve accessibility of your product, but need to know where to start to facilitate this successfully.

- You want to establish a culture for accessibility in your company, but aren’t sure how to make it work.

- You want to move from WCAG/EAA compliance to established accessibility practices and inclusion in research practices and beyond.

- You want to improve your overall accessibility knowledge and be viewed as an Accessibility Specialist for your organization.


About the Author

Dr. Michele A. Williams is owner of M.A.W. Consulting, LLC – Making Accessibility Work. Her 20+ years of experience include influencing top tech companies as a Senior User Experience (UX) Researcher and Accessibility Specialist and obtaining a PhD in Human-Centered Computing focused on accessibility. An international speaker, published academic author, and patented inventor, she is passionate about educating and advising on technology that does not exclude disabled users.


Testimonials

“Accessible UX Research stands as a vital and necessary resource. In addressing disability at the User Experience Research layer, it helps to set an equal and equitable tone for products and features that resonates through the rest of the creation process. The book provides a solid framework for all aspects of conducting research efforts, including not only process considerations, but also importantly the mindset required to approach the work.

This is the book I wish I had when I was first getting started with my accessibility journey. It is a gift, and I feel so fortunate that Michele has chosen to share it with us all.”

Eric Bailey, Accessibility Advocate

“User research in accessibility is non-negotiable for actually meeting users’ needs, and this book is a critical piece in the puzzle of actually doing and integrating that research into accessibility work day to day.”

Devon Pershing, Author of The Accessibility Operations Guidebook

“Our decisions as developers and designers are often based on recommendations, assumptions, and biases. Usually, this doesn’t work, because checking off lists or working solely from our own perspective can never truly represent the depth of human experience. Michele’s book provides you with the strategies you need to conduct UX research with diverse groups of people, challenge your assumptions, and create truly great products.”

Manuel Matuzović, Author of the Web Accessibility Cookbook

“This book is a vital resource on inclusive research. Michele Williams expertly breaks down key concepts, guiding readers through disability models, language, and etiquette. A strong focus on real-world application equips readers to conduct impactful, inclusive research sessions. By emphasizing diverse perspectives and proactive inclusion, the book makes a compelling case for accessibility as a core principle rather than an afterthought. It is a must-read for researchers, product-makers, and advocates!”

Anna E. Cook, Accessibility and Inclusive Design Specialist

Technical Details

- ISBN: 978-3-910835-03-0 (print)

- Quality hardcover, stitched binding, ribbon page marker.

- Free worldwide airmail shipping from Germany starting in August 2025.

- eBook available for download as PDF, ePUB, and Amazon Kindle later this summer.

- Pre-order the book.

Community Matters ❤️

Producing a book takes quite a bit of time, and we couldn’t pull it off without the support of our wonderful community. A huge shout-out to Smashing Members for the kind, ongoing support. The eBook is and always will be free for Smashing Members as soon as it’s out. Plus, Members get a friendly discount when purchasing their printed copy. Just sayin’! 😉

More Smashing Books & Goodies

Promoting best practices and providing you with practical tips to master your daily coding and design challenges has always been (and will be) at the core of everything we do at Smashing.

In the past few years, we were very lucky to have worked together with some talented, caring people from the web community to publish their wealth of experience as printed books that stand the test of time. Addy, Heather, and Steven are three of these people. Have you checked out their books already?

Success at Scale

A deep dive into how production sites of different sizes tackle performance, accessibility, capabilities, and developer experience at scale.

Understanding Privacy

Everything you need to know to put your users first and make a better web.

Touch Design for Mobile Interfaces

Learn how touchscreen devices really work — and how people really use them.

(as, cm)


What I Wish Someone Told Me When I Was Getting Into ARIA

Eric Bailey 2025-06-16T13:00:00+00:00

2025-06-25T15:04:30+00:00

If you haven’t encountered ARIA before, great! It’s a chance to learn something new and exciting. If you have heard of ARIA before, this might help you better understand it or maybe even teach you something new!

These are all things I wish someone had told me when I was getting started on my web accessibility journey. This post will:

- Provide a mindset for how to approach ARIA as a concept,

- Debunk some common misconceptions, and

- Provide some guiding thoughts to help you better understand and work with it.

It is my hope that in doing so, this post will help make an oft-overlooked yet vital corner of web design and development easier to approach.

What This Post Is Not

This is not a recipe book for how to use ARIA to build accessible websites and web apps. It is also not a guide for how to remediate an inaccessible experience. A lot of accessibility work is highly contextual. I do not know the specific needs of your project or organization, so trying to give advice here could easily do more harm than good.

Instead, think of this post as a “know before you go” guide. I’m hoping to give you a good headspace to approach ARIA, as well as highlight things to watch out for when you undertake your journey. So, with that out of the way, let’s dive in!

So, What Is ARIA?

ARIA is what you turn to if there is not a native HTML element or attribute that is better suited for the job of communicating interactivity, purpose, and state.

Think of it like a spice that you sprinkle into your markup to enhance things.

Adding ARIA to your HTML markup is a way of providing additional information to a website or web app for screen readers and voice control software.

- Interactivity means the content can be activated or manipulated. An example of this is navigating to a link’s destination.

- Purpose means what something is used for. An example of this is a text input used to collect someone’s name.

- State means the current status of content, set and controlled by states, properties, and values. An example of this is an accordion panel that can either be expanded or collapsed.

Here is an illustration to help communicate what I mean by this:

- The presence of HTML’s button element will instruct assistive technology to report it as a button, letting someone know that it can be activated to perform a predefined action.

- The presence of the text string “Mute” will be reported by assistive technology to clue the person into what the button is used for.

- The presence of aria-pressed="true" means that someone or something has previously activated the button, and it is now in a “pushed in” state that sustains its action.

This overall pattern will let people who use assistive technology know:

- If something is interactive,

- What kind of interactive behavior it performs, and

- Its current state.
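In a live interface, that pressed state is usually flipped by script each time the button is activated. Here is a minimal sketch of that, assuming a native button with type="button" and an initial aria-pressed="false"; the toggleMute name and the #mute selector are hypothetical. It relies only on getAttribute and setAttribute, which DOM elements provide, so it can also be tried with a plain stand-in object.

```javascript
// Hypothetical helper: flips a toggle button's aria-pressed state.
// Assumes the element starts with aria-pressed="true" or "false".
// Returns the new pressed state as a boolean.
function toggleMute(button) {
  const isPressed = button.getAttribute("aria-pressed") === "true";
  button.setAttribute("aria-pressed", String(!isPressed));
  return !isPressed;
}

// Browser wiring, skipped when there is no DOM. Assumes hypothetical
// markup such as:
// <button type="button" aria-pressed="false" id="mute">Mute</button>
if (typeof document !== "undefined") {
  const muteButton = document.querySelector("#mute");
  if (muteButton) {
    muteButton.addEventListener("click", () => toggleMute(muteButton));
  }
}
```

The visible text stays “Mute” throughout; only the state changes, which keeps the purpose and the state described above separate.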

ARIA’s History

ARIA has been around for a long time, with the first version published on September 26th, 2006.

ARIA was created to provide a bridge between the limitations of HTML and the need for making interactive experiences understandable by assistive technology.


The latest version of ARIA is version 1.2, published on June 6th, 2023. Version 1.3 is slated to be released relatively soon, and you can read more about it in this excellent article by Craig Abbott.

You may also see it referred to as WAI-ARIA, where WAI stands for “Web Accessibility Initiative.” The WAI is part of the W3C, the organization that sets standards for the web. That said, most accessibility practitioners I know call it “ARIA” in written and verbal communication and leave out the “WAI-” part.

The Spirit Of ARIA Reflects The Era In Which It Was Created

The reason for this is simple: The web was a lot less mature in the past than it is now. The most popular operating system in 2006 was Windows XP. The iPhone didn’t exist yet; it was released a year later.

At a very high level, ARIA is a snapshot of the operating system interaction paradigms of this time period, because ARIA recreates them.

The Mindset

Smartphones with features like tappable, swipeable, and draggable surfaces were far less commonplace. Single Page Application “web app” experiences were also rare, with Ajax-based approaches being the most popular. This means that we have to build the experiences of today using the technology of 2006. In a way, this is a good thing. It forces us to take new and novel experiences and interrogate them.

Interactions that cannot be broken down into smaller, more focused pieces that map to ARIA patterns are most likely inaccessible. This is because they can’t be operated by assistive technology and won’t function on older or less popular devices.

I may be biased, but I also think these sorts of novel interactions that can’t translate also serve as a warning that a general audience will find them to be confusing and, therefore, unusable. This belief is important to consider given that the internet serves:

- An unknown number of people,

- Using an unknown number of devices,

- Each with an unknown amount of personal customizations,

- Who have their own unique needs and circumstances, and

- Who have unknown motivational factors.

Interaction Expectations

Contemporary expectations for keyboard-based interaction for web content — checkboxes, radios, modals, accordions, and so on — are sourced from Windows XP and its predecessor operating systems. These interaction models are carried forward as muscle memory for older people who use assistive technology. Younger people who rely on assistive technology also learn these de facto standards, thus continuing the cycle.

What does this mean for you? Someone using a keyboard to interact with your website or web app will most likely try these Windows OS-based keyboard shortcuts first. This means things like pressing:

- Enter to navigate to a link’s destination,

- Space to activate buttons,

- Home and End to jump to the start or end of a list of items, and so on.
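When you build a custom widget instead of using a native element, meeting these expectations becomes your job. Here is a minimal sketch of the focus math for a list-like widget. Home and End come from the list above; ArrowUp and ArrowDown are an assumed example of the “and so on”, and the nextFocusIndex name and the choice to stop at the ends rather than wrap around are hypothetical design decisions.

```javascript
// Hypothetical helper: given the key pressed (a KeyboardEvent.key value),
// the index of the currently focused item, and the number of items,
// return the index that should receive focus next.
// Unhandled keys return the current index unchanged.
function nextFocusIndex(key, current, count) {
  if (count <= 0) return -1; // nothing to focus
  switch (key) {
    case "Home":
      return 0; // jump to the first item
    case "End":
      return count - 1; // jump to the last item
    case "ArrowDown":
      return Math.min(current + 1, count - 1); // stop at the last item
    case "ArrowUp":
      return Math.max(current - 1, 0); // stop at the first item
    default:
      return current;
  }
}
```

In a keydown handler you would pass event.key, move focus to the item at the returned index, and call event.preventDefault() for the keys you handle so that the page does not also scroll.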

It’s Also A Living Document

This is not to say that ARIA has stagnated. It is constantly being worked on with new additions, removals, and clarifications. Remember, it is now at version 1.2, with version 1.3 arriving soon.

In parallel, HTML as a language also reflects this evolution. Elements were originally created to support a document-oriented web and have been gradually evolving to support more dynamic, app-like experiences. The great bit here is that this is all conducted in the open and is something you can contribute to if you feel motivated to do so.

ARIA Has Rules For Using It

There are five rules included in ARIA’s documentation to help steer how you approach it:

- Use a native element whenever possible. An example would be using an anchor element (<a>) for a link rather than a div with a click handler and a role of link.

- Don’t adjust a native element’s semantics if at all possible. An example would be trying to use a heading element as a tab rather than wrapping the heading in a semantically neutral div.

- Anything interactive has to be keyboard operable. If you can’t use it with a keyboard, it isn’t accessible. Full stop.

- Do not use role="presentation" or aria-hidden="true" on a focusable element. This makes something intended to be interactive unable to be used by assistive technology.

- Interactive elements must be named. An example of this is using the text string “Print” for a button element.

Observing these five rules will do a lot to help you out. The following is more context to provide even more support.
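Some of these rules are mechanical enough to check in code. The toy checker below covers the fourth and fifth rules against a hypothetical plain-object description of an element, not a real DOM or tooling API. Treating “focusable” as a stand-in for “interactive”, and accepting any aria-labelledby value as a name, are simplifications made for this sketch.

```javascript
// Toy checker for rules 4 and 5. It takes a plain descriptor object
// (a hypothetical shape, not a DOM or tooling API), for example:
//   { focusable: true, text: "Print", attrs: { "aria-hidden": "true" } }
// "focusable" stands in for "interactive", which is a simplification.
function checkRules(el) {
  const problems = [];
  const attrs = el.attrs || {};

  // role="none" is ARIA's synonym for role="presentation".
  const hiddenOrPresentational =
    attrs["aria-hidden"] === "true" ||
    attrs.role === "presentation" ||
    attrs.role === "none";

  // Rule 4: no role="presentation" or aria-hidden="true" on focusable elements.
  if (el.focusable && hiddenOrPresentational) {
    problems.push("rule 4: focusable element is hidden or presentational");
  }

  // Rule 5: interactive elements must be named. A name is taken from
  // visible text, aria-label, or the mere presence of aria-labelledby
  // (real accessible name computation is far more involved).
  const hasName = [el.text, attrs["aria-label"], attrs["aria-labelledby"]].some(
    (value) => typeof value === "string" && value.trim() !== ""
  );
  if (el.focusable && !hasName) {
    problems.push("rule 5: interactive element has no accessible name");
  }

  return problems;
}
```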

ARIA Has A Taxonomy

There is a structured grammar to ARIA, and it is centered around roles, as well as states and properties.

Roles

A Role is what assistive technology reads and then announces. A lot of people refer to this in shorthand as semantics. HTML elements have implied roles, which is why an anchor element will be announced as a link by screen readers with no additional work.

Implied roles are almost always better to use if the use case calls for them. Recall the first rule of ARIA here. This is usually what digital accessibility practitioners refer to when they say, “Just use semantic HTML.”

There are many reasons for favoring implied roles. The main consideration is better guarantees of support across an unknown number of operating systems, browsers, and assistive technology combinations.

Roles have categories, each with its own purpose. The Abstract role category is notable in that it is an organizing supercategory not intended to be used by authors:

Abstract roles are used for the ontology. Authors MUST NOT use abstract roles in content.

```html
<!-- This won't work, don't do it -->
<h2 role="sectionhead">
  Anatomy and physiology
</h2>

<!-- Do this instead -->
<section aria-labelledby="anatomy-and-physiology">
  <h2 id="anatomy-and-physiology">
    Anatomy and physiology
  </h2>
</section>
```

Additionally, in the same way that you can only declare ARIA on certain things, you can only declare some ARIA as children of other ARIA declarations. An example of this is the listitem role, which requires a role of list to be present on its parent element.

So, what’s the best way to determine if a role requires a parent declaration? The answer is to review the official definition.
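Checking for a required parent can be sketched as a lookup table. The table below is a small, illustrative subset written for this example, the hasValidParent name is hypothetical, and the check looks only at the immediate parent’s role; the official definition for each role remains the source of truth.

```javascript
// Small, illustrative subset of ARIA's required context roles:
// child role -> roles its parent may have. Not the full table.
const REQUIRED_CONTEXT = new Map([
  ["listitem", ["list"]],
  ["tab", ["tablist"]],
  ["option", ["listbox", "group"]],
]);

// Returns true if an element with `childRole` may sit directly inside a
// parent with `parentRole`. Roles missing from the subset are treated as
// unconstrained, and only the immediate parent is considered.
function hasValidParent(childRole, parentRole) {
  const allowed = REQUIRED_CONTEXT.get(childRole);
  return allowed === undefined || allowed.includes(parentRole);
}
```

For example, hasValidParent("listitem", "list") returns true, while hasValidParent("listitem", "navigation") returns false, mirroring the listitem rule described above.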

States And Properties

States and properties are the other two main parts of ARIA’s overall taxonomy.

Implicit roles are provided by semantic HTML, and explicit roles are provided by ARIA. Both describe what an element is. States describe that element’s characteristics in a way that assistive technology can understand. This is done via property declarations and their companion values.

ARIA states can change quickly or slowly, as a result of both human interaction and application state. When the state is changed as a result of human interaction, it is considered an “unmanaged state.” Here, a developer must supply the underlying JavaScript logic to control the interaction.

When the state changes as a result of the application (e.g., operating system, web browser, and so on), this is considered “managed state.” Here, the application automatically supplies the underlying logic.

How To Declare ARIA

Think of ARIA as an extension of HTML attributes, a suite of name/value pairs. Some values are predefined, while others are author-supplied:

For the examples in the previous graphic, the polite value for aria-live is one of the three predefined values (off, polite, and assertive). For aria-label, “Save” is a text string manually supplied by the author.
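The split between predefined and author-supplied values can be expressed as a small validator. The token lists below cover only three attributes: aria-live’s values are the ones named above, and the aria-pressed and aria-expanded lists are included for illustration. The isValidValue name is hypothetical.

```javascript
// Predefined tokens for a few ARIA attributes (a small subset).
// aria-live's tokens are the three named above.
const PREDEFINED_TOKENS = new Map([
  ["aria-live", ["off", "polite", "assertive"]],
  ["aria-pressed", ["true", "false", "mixed", "undefined"]],
  ["aria-expanded", ["true", "false", "undefined"]],
]);

// Hypothetical validator. Attributes with predefined tokens must use one
// of them exactly; anything else (for example aria-label) is treated as
// author-supplied text and only needs to be a non-empty string.
function isValidValue(name, value) {
  const tokens = PREDEFINED_TOKENS.get(name);
  if (tokens === undefined) {
    return typeof value === "string" && value.trim() !== "";
  }
  return tokens.includes(value);
}
```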

You declare ARIA on HTML elements the same way you declare other attributes:

```html
<!--
  Applies an id value of
  "carrot" to the div
-->
<div id="carrot"></div>

<!--
  Hides the content of this paragraph
  element from assistive technology
-->
<p aria-hidden="true">
  Assistive technology can't read this
</p>

<!--
  Provides an accessible name of "Stop",
  and also communicates that the button
  is currently pressed. A type attribute
  with a value of "button" prevents
  browser form submission.
-->
<button
  aria-label="Stop"
  aria-pressed="true"
  type="button">
  <!-- SVG icon -->
</button>
```

Other usage notes:

- You can place more than one ARIA declaration on an HTML element.

- The order of placement of ARIA when declared on an HTML element does not matter.

- There is no limit to how many ARIA declarations can be placed on an element. Be aware that the more you add, the more complexity you introduce, and more complexity means a larger chance things may break or not function as expected.

- You can declare ARIA on an HTML element and also have other non-ARIA declarations, such as class or id. The order of declarations does not matter here, either.

It might also be helpful to know that boolean attributes are treated a little differently in ARIA when compared to HTML. Hidde de Vries writes about this in his post, “Boolean attributes in HTML and ARIA: what’s the difference?”.
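The core of that difference fits in two small functions. The sketch uses disabled and aria-disabled as the example pair and a plain name-to-value map in place of a real element; in a browser the equivalents would be element.hasAttribute() and element.getAttribute(). The function names are hypothetical.

```javascript
// `attrs` is a plain name -> value map standing in for an element's
// attributes (in a browser: element.hasAttribute / element.getAttribute).

// HTML boolean attribute such as disabled: on whenever it is present,
// whatever value it carries, even disabled="false".
function htmlBooleanIsOn(attrs, name) {
  return Object.prototype.hasOwnProperty.call(attrs, name);
}

// ARIA "boolean" state such as aria-disabled: a string value, and only
// the literal "true" means true.
function ariaBooleanIsTrue(attrs, name) {
  return attrs[name] === "true";
}
```

So an element carrying both disabled="false" and aria-disabled="false" is still disabled as far as HTML is concerned, while its ARIA state is not true.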

Not A Whole Lot Of ARIA Is “Hardcoded”

In this context, “hardcoding” means directly writing a static attribute or value declaration into your component, view, or page.

A lot of ARIA is designed to be applied or conditionally modified dynamically based on application state or as a response to someone’s action. An example of this is a show-and-hide disclosure pattern:

- ARIA’s aria-expanded attribute is toggled between false and true to communicate whether the disclosure is in an expanded or collapsed state.

- HTML’s hidden attribute is conditionally removed or added in tandem to show or hide the disclosure’s full content area.

```html
<div class="disclosure-container">
  <button
    aria-expanded="false"
    class="disclosure-toggle"
    type="button">
    How we protect your personal information
  </button>
  <div
    hidden
    class="disclosure-content">
    <ul>
      <li>Fast, accurate, thorough and non-stop protection from cyber attacks</li>
      <li>Patching practices that address vulnerabilities that attackers try to exploit</li>
      <li>Data loss prevention practices help to ensure data doesn't fall into the wrong hands</li>
      <li>Supply risk management practices help ensure our suppliers adhere to our expectations</li>
    </ul>
    <p>
      <a href="/security/">Learn more about our security best practices</a>.
    </p>
  </div>
</div>
```
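The disclosure markup is only the starting state; script has to keep both attributes in sync. Here is a minimal sketch, assuming the class names from the example markup; the toggleDisclosure name is hypothetical. It uses only getAttribute, setAttribute, and the hidden property that DOM elements provide.

```javascript
// Flips aria-expanded on the toggle and, in tandem, the content's
// hidden state. Returns the new expanded state as a boolean.
function toggleDisclosure(button, content) {
  const wasExpanded = button.getAttribute("aria-expanded") === "true";
  button.setAttribute("aria-expanded", String(!wasExpanded));
  content.hidden = wasExpanded; // collapse if it was open, reveal if closed
  return !wasExpanded;
}

// Browser wiring for the example markup (skipped when there is no DOM).
if (typeof document !== "undefined") {
  for (const container of document.querySelectorAll(".disclosure-container")) {
    const button = container.querySelector(".disclosure-toggle");
    const content = container.querySelector(".disclosure-content");
    if (button && content) {
      button.addEventListener("click", () => toggleDisclosure(button, content));
    }
  }
}
```

Deriving the new state from aria-expanded, rather than from a separate JavaScript variable, keeps the announced state as the single source of truth.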

A common example of a hardcoded ARIA declaration you’ll encounter on the web is making an SVG icon inside a button decorative:

```html
<button type="button">
  <svg aria-hidden="true">
    <!-- SVG code -->
  </svg>
  Save
</button>
```

Here, the string “Save” is what is required for someone to understand what the button will do when they activate it. The accompanying icon helps that understanding visually but is considered redundant and therefore decorative.

Declaring An ARIA Role On Something That Already Uses That Role Implicitly Does Not Make It “Extra” Accessible

An implied role is all you need if you’re using semantic HTML. Explicitly declaring its role via ARIA does not confer any additional advantages.

```html
<!--
  You don't need to declare role="button" here.
  Using the <button> element will make assistive
  technology announce it as a button. The
  role="button" declaration is redundant.
-->
<button role="button">
  Save
</button>
```

You might occasionally run into these redundant declarations on HTML sectioning elements, such as <main role="main">, or <footer role="contentinfo">. This isn’t needed anymore, and you can just use the <main> or <footer> elements.

The reason for this is historic. These declarations were a stop-gap for assistive technology that had not yet been updated to support these new-at-the-time HTML elements.

Contemporary assistive technology does not need these redundant declarations. Think of it the same way that we don’t have to use vendor prefixes for the CSS border-radius property anymore.

Note: There is an exception to this guidance. There are circumstances where certain complex and complicated markup patterns don’t work as expected for assistive technology. In these cases, we want to hardcode the implicit role as explicit ARIA to ensure it works. This assistive technology support concern is covered in more detail later in this post.
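A check for these redundant declarations can be sketched as a lookup of implicit roles. Given the exception in the note, a match is best treated as a prompt for review rather than an automatic fix. The table is a small subset chosen for this example, and isRedundantRole is a hypothetical name.

```javascript
// Hypothetical lint helper: flags an explicit role that only repeats an
// element's implicit role. Small subset; it ignores context, such as
// <footer> and <header> mapping to contentinfo and banner only when they
// are not nested inside elements like <article> or <section>.
const IMPLICIT_ROLES = new Map([
  ["button", "button"],
  ["main", "main"],
  ["nav", "navigation"],
  ["ul", "list"],
  ["header", "banner"],
  ["footer", "contentinfo"],
]);

function isRedundantRole(tagName, role) {
  return IMPLICIT_ROLES.get(tagName.toLowerCase()) === role;
}
```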

You Don’t Need To Say What A Control Is; That Is What Roles Are For

Both implicit and explicit roles are announced by screen readers, so you don’t need to repeat the role in the interactive element’s text string or in an aria-label.

```html
<!-- Don't do this -->
<button
  aria-label="Save button"
  type="button">
  <!-- Icon SVG -->
</button>

<!-- Do this instead -->
<button
  aria-label="Save"
  type="button">
  <!-- Icon SVG -->
</button>
```

Had we used the string value of “Save button” for our Save button, a screen reader would announce it along the lines of, “Save button, button.” That’s redundant and confusing.
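A rough automated check for this mistake compares the end of the accessible name with the role’s own word. The role-to-word table is a small, assumed subset, and nameRepeatsRole is a hypothetical name; real names and roles vary by language and assistive technology.

```javascript
// Words a screen reader would already announce for a few roles
// (a small, assumed subset).
const ROLE_WORDS = new Map([
  ["button", ["button"]],
  ["link", ["link"]],
  ["checkbox", ["checkbox", "check box"]],
]);

// Returns true when the accessible name ends with its own role word,
// like "Save button", which would be announced as "Save button, button".
function nameRepeatsRole(name, role) {
  const words = ROLE_WORDS.get(role) || [];
  const normalized = name.trim().toLowerCase();
  return words.some(
    (word) => normalized === word || normalized.endsWith(" " + word)
  );
}
```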

ARIA Roles Have Very Specific Meanings

We sometimes refer to website and web app navigation colloquially as menus, especially if it’s an e-commerce-style mega menu.

In ARIA, menus mean something very specific. Don’t think of global or in-page navigation or the like. Think of menus in this context as what appears when you click the Edit menu button on your application’s menubar.

Using a role improperly because its name seems like an appropriate fit at first glance creates confusion for people who do not have the context of the visual UI. Their expectations will be set with the announcement of the role, then subverted when it does not act the way it is supposed to.

Imagine if you click on a link, and instead of taking you to another webpage, it sends something completely unrelated to your printer instead. It’s sort of like that.

Declaring role="menu" is a common example of a misapplied role, but there are others. The best way to know what a role is used for? Go straight to the source and read up on it.

Certain Roles Are Forbidden From Having Accessible Names

These roles are caption, code, deletion, emphasis, generic, insertion, paragraph, presentation, strong, subscript, and superscript.

This means you can try and provide an accessible name for one of these elements — say via aria-label — but it won’t work because it’s disallowed by the rules of ARIA’s grammar.

```html
<!-- This won't work -->
<strong aria-label="A 35% discount!">
  $39.95
</strong>

<!-- Neither will this -->
<code title="let JavaScript example">
  let submitButton = document.querySelector('button[type="submit"]');
</code>
```

For these examples, recall that the role is implicit, sourced from the declared HTML element.

Note here that sometimes a browser will make an attempt regardless and overwrite the author-specified string value. This overriding is a confusing act for all involved, which led to the rule being established in the first place.
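Because nothing stops you from writing these declarations, a small check can flag them. The role list below is the one given above; the checkProhibitedName name, the plain attribute map, and the set of naming attributes checked are assumptions for this sketch. Mapping an element such as strong or code to its implicit role is left out.

```javascript
// Roles that cannot take an accessible name, as listed above.
const NAME_PROHIBITED_ROLES = new Set([
  "caption", "code", "deletion", "emphasis", "generic", "insertion",
  "paragraph", "presentation", "strong", "subscript", "superscript",
]);

// Naming attributes checked here. title is included because it is a
// fallback source in the accessible name computation; the list is a
// simplification.
const NAMING_ATTRIBUTES = ["aria-label", "aria-labelledby", "title"];

// Returns a warning string, or null if nothing is wrong. `attrs` is a
// plain name -> value map (a hypothetical shape for this sketch).
function checkProhibitedName(role, attrs) {
  if (!NAME_PROHIBITED_ROLES.has(role)) return null;
  const used = NAMING_ATTRIBUTES.filter((name) =>
    Object.prototype.hasOwnProperty.call(attrs, name)
  );
  return used.length > 0
    ? `role "${role}" cannot be named, but has ${used.join(", ")}`
    : null;
}
```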

You Can’t Make Up ARIA And Expect It To Work

I’ve witnessed some developers guess-adding CSS classes, such as .background-red or .text-white, to their markup and being rewarded if the design visually updates correctly.

The reason this works is that someone previously added those classes to the project. With ARIA, the people who add the content we can use are the Accessible Rich Internet Applications Working Group. This means each new version of ARIA has a predefined set of properties and values. Assistive technology is then updated to parse those attributes and values, although this isn’t always a guarantee.

Declaring ARIA, which isn’t part of that predefined set, means assistive technology won’t know what it is and consequently won’t announce it.

```html
<!--
  There is no "selectpanel" role in ARIA.
  Because of this, this code will be announced
  as a button and not as a select panel.
-->
<button
  role="selectpanel"
  type="button">
  Choose resources
</button>
```

ARIA Fails Silently

This speaks to the previous section, where ARIA won’t understand words spoken to it that exist outside its limited vocabulary.

There are no console errors for malformed ARIA. There’s also no alert dialog, beeping sound, or flashing light for your operating system, browser, or assistive technology. This fact is yet another reason why it is so important to test with actual assistive technology.

You don’t have to be an expert here, either. There is a good chance your code needs updating if you set something to announce as a specific state and assistive technology in its default configuration does not announce that state.
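Since nothing warns you, one option is a development-time check that does. The sketch below flags role tokens missing from KNOWN_ROLES, a partial, hand-picked subset of ARIA roles written for this example; with a subset, valid roles that were left out will also be flagged, so a real check would need the complete list from the specification. The unknownRoles name is hypothetical, and none of this replaces testing with actual assistive technology.

```javascript
// Partial, illustrative subset of ARIA roles. Not the full set.
const KNOWN_ROLES = new Set([
  "alert", "article", "banner", "button", "checkbox", "dialog", "group",
  "heading", "img", "link", "list", "listbox", "listitem", "main",
  "menu", "menuitem", "navigation", "none", "option", "presentation",
  "radio", "region", "row", "switch", "tab", "tablist", "tabpanel",
]);

// Returns the tokens in a role attribute value that are not in
// KNOWN_ROLES. The role attribute may hold several space-separated tokens.
function unknownRoles(roleValue) {
  return roleValue
    .trim()
    .split(/\s+/)
    .filter((token) => token !== "" && !KNOWN_ROLES.has(token));
}
```

Abstract roles such as sectionhead are deliberately absent from the list, so they are flagged too. In a browser you might run this over document.querySelectorAll("[role]") and pass any results to console.warn.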

ARIA Only Exposes The Presence Of Something To Assistive Technology

Applying ARIA to something does not automatically “unlock” capabilities. It only sends a hint to assistive technology about how the interactive content should behave.

For assistive technology like screen readers, that hint could be for how to announce something. For assistive technology like refreshable Braille displays, it could be for how it raises and lowers its pins. For example, declaring role="button" on a div element does not automatically make it clickable. You will still need to:

- Target the div element in JavaScript,

- Tie it to a click event,

- Author the interactive logic that it performs when clicked, and then

- Accommodate all the other expected behaviors.

This all makes me wonder why you can’t save yourself some work and use a button element in the first place, but that is a different story for a different day.
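To make that list concrete, here is a minimal sketch of the work a div with role="button" still needs. The makeDivButton and isButtonActivationKey names are hypothetical, and the sketch simplifies: it activates on keydown for both Enter and Space, while native buttons fire Space activation on keyup, and it does not handle disabled states or form submission.

```javascript
// Activation keys for a button: Enter and Space (event.key is " ").
function isButtonActivationKey(key) {
  return key === "Enter" || key === " ";
}

// Hypothetical helper: makes an element focusable and operable by mouse
// and keyboard, calling `onActivate` in both cases. A native <button>
// provides all of this without any script.
function makeDivButton(el, onActivate) {
  el.setAttribute("role", "button");
  el.setAttribute("tabindex", "0"); // put it in the tab order
  el.addEventListener("click", onActivate);
  el.addEventListener("keydown", (event) => {
    if (isButtonActivationKey(event.key)) {
      event.preventDefault(); // stop Space from scrolling the page
      onActivate(event);
    }
  });
}
```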

Additionally, adjusting an element’s role via ARIA does not modify the element’s native functionality. For example, you can declare role="image" on a div element. However, attempting to declare the alt or src attributes on the div won’t work. This is because alt and src are not supported attributes for div.

Declaring an ARIA Role On Something Will Override Its Semantics, But Not Its Behavior

This speaks to the previous section on ARIA only exposing something’s presence. Don’t forget that certain HTML elements have primary and secondary interactive capabilities built into them.

For example, an anchor element’s primary capability is navigating to whatever URL value is provided for its href attribute. Secondary capabilities for an anchor element include copying the URL value, opening it in a new tab or incognito window, and so on.

These secondary capabilities are still preserved. However, it may not be apparent to someone that they can use them — or use them in the way that they’d expect — depending on what is announced.

The opposite is also true. When an element has no capabilities, having its role adjusted does not grant it any new abilities. Remember, ARIA only announces. This is why that div with a role of button assigned to it won’t do anything when clicked if no companion JavaScript logic is also present.

You Will Need To Declare ARIA To Make Certain Interactions Accessible

A lot of the previous content may make it seem like ARIA is something you should avoid using altogether. This isn’t true. Know that this guidance is written to help steer you to situations where HTML does not offer the capability to describe an interaction out of the box. This space is where you want to use ARIA.

Knowing how to identify this area requires spending some time learning what HTML elements there are, as well as what they are and are not used for. I quite like HTML5 Doctor’s Element Index for upskilling on this.
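One example of that gap, sketched here under the assumption of a simple mute toggle: HTML’s button element has no attribute for a pressed state, so aria-pressed supplies it. The id and label are hypothetical, and the script is the companion logic that ARIA itself does not provide.

```html
<!-- HTML has no native "pressed" state for buttons, so aria-pressed fills the gap -->
<button type="button" aria-pressed="false" id="mute">Mute</button>

<script>
  const mute = document.getElementById("mute");
  mute.addEventListener("click", () => {
    // Flip the announced state; visual styling should follow it too
    const pressed = mute.getAttribute("aria-pressed") === "true";
    mute.setAttribute("aria-pressed", String(!pressed));
  });
</script>
```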

Certain ARIA States Require Certain ARIA Roles To Be Present

This is analogous to how HTML has both global attributes and attributes that can only be used on a per-element basis. For example, aria-describedby can be used on any HTML element or role. However, aria-posinset can only be used with article, comment, listitem, menuitem, option, radio, row, and tab roles. Remember here that these roles can be provided by either HTML or ARIA.
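A small sketch of this rule, assuming a paginated list where only results 11 and 12 of 50 are rendered. The li elements get their listitem role from HTML, so aria-posinset is allowed; the bare div has no role that supports it, so the same state is invalid there. The content is placeholder text.

```html
<!-- Valid: li has an implicit listitem role, which supports aria-posinset -->
<ul>
  <li aria-posinset="11" aria-setsize="50">Result eleven</li>
  <li aria-posinset="12" aria-setsize="50">Result twelve</li>
</ul>

<!-- Invalid: a div with no role is generic, which does not support aria-posinset -->
<div aria-posinset="11" aria-setsize="50">Result eleven</div>
```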

You can learn which states require which roles by reading the official reference. Check the “Used in Roles” portion of each entry’s characteristics:

Figure: aria-setsize.

Automated code scanners, like axe, WAVE, ARC Toolkit, Pa11y, equal-access, and so on, can catch this sort of mistake when a state is declared on a role that does not support it. I’m a big fan of implementing these sorts of checks as part of a continuous integration strategy, as it makes accessibility a code quality concern shared across the whole team.

ARIA Is More Than Web Browsers

Speaking of technology that listens, it is helpful to know that the ARIA you declare instructs the browser to speak to the operating system the browser is installed on. Assistive technology then listens to what the operating system reports. It then communicates that to the person using the computer, tablet, smartphone, and so on.

A person can then instruct assistive technology to request the operating system to take action on the web content displayed in the browser.

This interaction model is by design. It is done to make interaction from assistive technology indistinguishable from interaction performed without assistive technology.

There are a few reasons for this approach. The most important is that it helps preserve the privacy and autonomy of the people who rely on assistive technologies.

Just Because It Exists In The ARIA Spec Does Not Mean Assistive Technology Will Support It

This support issue was touched on earlier and is a difficult fact to come to terms with.

Contemporary developers enjoy the hard-fought, hard-won benefits of the web standards movement. This means you can declare HTML and know that it will work with every major browser out there. ARIA does not have this. Each assistive technology vendor has its own interpretation of the ARIA specification. Oftentimes, these interpretations are convergent. Sometimes, they’re not.

Assistive technology vendors also have their own support roadmaps for their products. For any given ARIA feature:

- Some vendors will eventually add support,

- Some may never add support, and

- Some might add support in a way that contradicts how other vendors choose to implement things.

There is also the operating system layer to contend with, which I’ll cover in more detail in a little bit. Here, the mechanisms used to communicate with assistive technology are dusty, oft-neglected areas of software development.

With these layers comes a scenario where the assistive technology can support the ARIA declared, but the operating system itself cannot communicate the ARIA’s presence, or vice-versa. The reasons for this are varied but ultimately boil down to a historic lack of support, prioritization, and resources. However, I am optimistic that this is changing.

Additionally, there is no equivalent to Caniuse, Baseline, or Web Platform Status for assistive technology. The closest analog we have for checking support is a11ysupport.io, but know that it is the painstaking work of a single individual. Its content may not be up to date, as the work is both Herculean in its scale and Sisyphean in its scope. Because of this, I must re-stress the importance of manually testing with assistive technology to determine if the ARIA you use works as intended.

How To Determine ARIA Support

There are three main layers to check when determining whether something is supported:

- Operating system and version,

- Assistive technology and version, and

- Browser and browser version.

1. Operating System And Version

Each operating system (e.g., Windows, macOS, Linux) has its own way of communicating what content is present to assistive technology. Each piece of assistive technology has to accommodate how to parse that communication.

Some assistive technology is incompatible with certain operating systems. For example, you can’t use VoiceOver on Windows or JAWS on macOS. Furthermore, each version of each operating system has slight variations in what is reported and how. Sometimes, the operating system needs to be updated to “teach” it the updated ARIA vocabulary. Also, do not forget that bugs and regressions can occur.

2. Assistive Technology And Version

There is no “one true way” to make assistive technology. Each one is built to address different access needs and wants, and each does so in an opinionated way. Think of how different web browsers have different features and UI.

Each piece of assistive technology that consumes web content has its own way of communicating this information, and this is by design. It works with what the operating system reports, filtered through things like heuristics and preferences.

Figure: aria-label.

Like operating systems, assistive technology has different versions, and each version differs in what it is capable of supporting. Assistive technology is also susceptible to bugs and regressions.

Two other factors worth pointing out here are upgrade hesitancy and lack of financial resources. Some people who rely on assistive technology are hesitant to upgrade it. This is based on a very understandable fear of breaking an important mechanism they use to interact with the world. This, in turn, translates to scenarios like holding off on updates until absolutely necessary, as well as disabling auto-updating functionality altogether.

Lack of financial resources is sometimes referred to as the disability or crip tax. Employment rates tend to be lower for disabled populations, and with that comes less money to spend on acquiring new technology and updating it. This concern can and does apply to operating systems, browsers, and assistive technology.

3. Browser And Browser Version

Some assistive technology works better with one browser than with another. This is due to the underlying mechanics of how the browser reports its content to assistive technology. Using Firefox with NVDA is an example of this.

Additionally, support for this reporting is sometimes only added in newer browser versions. Unfortunately, support can also accidentally regress without anyone noticing before the browser update is released. Again, this is due to a historic lack of resources and prioritization.

The Less Commonly Used The ARIA You Declare, The Greater The Chance You’ll Need To Test It

Common ARIA declarations you’ll come across include, but are not limited to:

- aria-label,

- aria-labelledby,

- aria-describedby,

- aria-hidden, and

- aria-live.

These are more common because they are better supported, and they are better supported because many of them have been around for a while. Recall the previous section that discussed actual assistive technology support compared to what the ARIA specification supplies.

Newer, more esoteric ARIA, or historically deprioritized declarations, may not have that support yet or may never. An example of how complicated this can get is aria-controls.

aria-controls is a part of ARIA that has been around for a while. JAWS had support for aria-controls, but then removed it after user feedback. Meanwhile, every other screen reader I’m aware of never bothered to add support.

What does that mean for us? Determining support, or lack thereof, is best accomplished by manual testing with assistive technology.
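For reference, a disclosure button that declares aria-controls might look like the sketch below. The ids and text are hypothetical. Given the support history described above, treat aria-controls here as something to verify through manual testing rather than something to rely on; the open or closed state itself is conveyed by aria-expanded.

```html
<!-- aria-expanded conveys the open/closed state.
     aria-controls is valid ARIA, but may not be announced at all. -->
<button type="button" id="shipping-toggle"
        aria-expanded="false" aria-controls="shipping-details">
  Shipping details
</button>
<div id="shipping-details" hidden>
  <p>Placeholder shipping information.</p>
</div>

<script>
  const toggle = document.getElementById("shipping-toggle");
  const panel = document.getElementById("shipping-details");
  toggle.addEventListener("click", () => {
    const expanded = toggle.getAttribute("aria-expanded") === "true";
    toggle.setAttribute("aria-expanded", String(!expanded));
    // Hide the panel when collapsing, show it when expanding
    panel.hidden = expanded;
  });
</script>
```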

The More ARIA You Add To Something, The Greater The Chance Something Will Behave Unexpectedly

This fact takes into consideration the complexities in preferences, different levels of support, bugs, regressions, and other concerns that come with ARIA’s usage.

Philosophically, it’s a lot like adding more interactive complexity to your website or web app via JavaScript. The larger the surface area your code covers, the bigger the chance something unintended happens.

Consider the amount of ARIA added to a component or discrete part of your experience. The more ARIA is declared in nested parts of the Document Object Model (DOM), the more it interacts with ARIA declared on parent elements. This is because assistive technology reads what the DOM exposes to help determine intent.

A lot of contemporary development efforts are isolated, feature-based work that focuses on one small portion of the overall experience. Because of this, they may not take this holistic nesting situation into account. This is another reason why — you guessed it — manual testing is so important.
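A hypothetical sketch of how this nesting problem can arise: a button that works on its own stops being exposed once another part of the page wraps it in a container with aria-hidden="true", because aria-hidden applies to every descendant. The markup and file name are placeholders.

```html
<!-- Authored in isolation: a focusable button with a visible label -->
<button type="button">Add to cart</button>

<!-- Later wrapped by another team to hide decorative content.
     aria-hidden applies to all descendants, so the button is removed
     from the accessibility tree while remaining keyboard-focusable. -->
<div aria-hidden="true">
  <img src="promo-banner.png" alt="">
  <button type="button">Add to cart</button>
</div>
```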

Anecdotally, the WebAIM Million, WebAIM’s annual accessibility evaluation of the home pages of the top 1,000,000 websites, touches on this phenomenon:

Increased ARIA usage on pages was associated with higher detected errors. The more ARIA attributes that were present, the more detected accessibility errors could be expected. This does not necessarily mean that ARIA introduced these errors (these pages are more complex), but pages typically had significantly more errors when ARIA was present.

Assistive Technology May Support Your Invalid ARIA Declaration

There is a chance that inaccurately authored ARIA will actually function as intended with assistive technology. While I do not recommend betting on this fact to do your work, I do think it is worth mentioning when it comes to things like debugging.

This is due to the wide range of familiarity with ARIA among the people who author it.

Some of the more mature assistive technology vendors try to accommodate the lower end of this familiarity. This is done in order to better enable the people who use their software to actually get what they need.

There isn’t an exhaustive list of what accommodations each piece of assistive technology has. Think of it like the forgiving nature of a browser’s HTML parser, where the ultimate goal is to render content for humans.

aria-label Is Tricky

aria-label is one of the most common ARIA declarations you’ll run across. It’s also one of the most misused.

aria-label can’t be applied to many non-interactive HTML elements, such as a div or span with no role, but oftentimes is. It can’t always be translated and is oftentimes overlooked in localization efforts. Additionally, it can make things frustrating to operate for people who use voice control software, where the visible label differs from what the underlying code uses.

Another problem is when it overrides an interactive element’s pre-existing accessible name. For example:

```html
<!-- Don't do this -->
<a
  aria-label="Our services"
  href="/services/">
  Services
</a>
```

This is a violation of WCAG Success Criterion 2.5.3: Label in Name, pure and simple. I have also seen it used as a way to provide a control hint. This is also a WCAG failure, in addition to being an antipattern:

```html
<!-- Also don't do this -->
<a
  aria-label="Click this link to learn more about our unique and valuable services"
  href="/services/">
  Services
</a>
```

These factors — along with other considerations — are why I consider aria-label a code smell.
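For comparison, a sketch of how the two links above could work without aria-label. The longer link text is a hypothetical rewording; the point is that any extra context is visible, so the visible label and the accessible name stay the same.

```html
<!-- The visible text already provides the accessible name -->
<a href="/services/">Services</a>

<!-- If more context is needed, make it visible to everyone,
     including people who use voice control software -->
<a href="/services/">Learn more about our services</a>
```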

aria-live Is Even Trickier

Live region announcements are powered by aria-live and are an important part of communicating updates to an experience to people who use screen readers.

Believe me when I say that getting aria-live to work properly is tricky, even under the best of scenarios. I won’t belabor the specifics here. Instead, I’ll point you to “Why are my live regions not working?”, a fantastic and comprehensive article published by TetraLogical.
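As a starting point only, here is a minimal sketch of the most common pattern: the live region is present when the page loads, and its text is changed later. The id and the announceCartUpdate function are hypothetical, and even this pattern can fail in some browser and screen reader combinations, which is why the TetraLogical article is worth reading.

```html
<!-- The live region exists, empty, from page load -->
<div id="cart-status" aria-live="polite"></div>

<script>
  // Hypothetical function called after an item is added to the cart
  function announceCartUpdate(count) {
    const noun = count === 1 ? "item" : "items";
    // Changing the text of an existing live region is what
    // prompts screen readers to announce the update
    document.getElementById("cart-status").textContent =
      `Your cart now has ${count} ${noun}`;
  }
</script>
```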

The ARIA Authoring Practices Guide Can Lead You Astray

Also referred to as the APG, the ARIA Authoring Practices Guide should be treated with a decent amount of caution.

The Downsides

The guide was originally authored to help demonstrate ARIA’s capabilities. As a result, its code examples near-exclusively, overwhelmingly, and disproportionately favor ARIA.

Unfortunately, the APG’s latest redesign also makes it far more approachable-looking than its surrounding W3C documentation. This is coupled with demonstrating UI patterns in a way that signals it’s a self-serve resource whose code can be used out of the box.